A Call for Centering Focus on People Harmed By AI

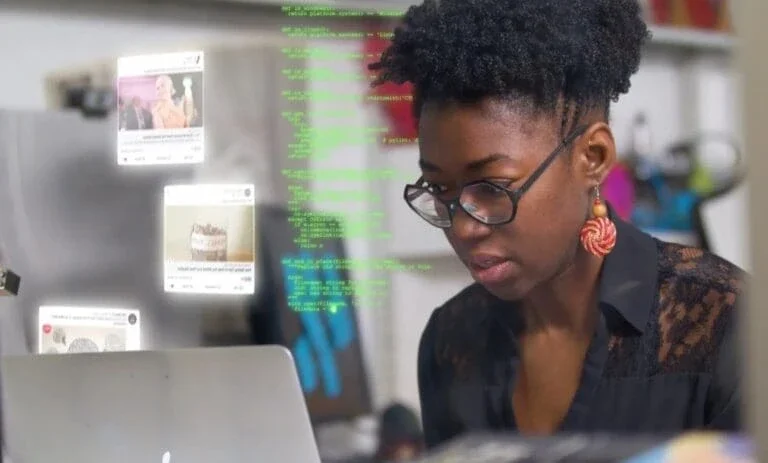

As she dug deeper, Buolamwini discovered two more worrisome facts. First, despite the power of algorithms, neither oversight nor regulations were in place. Second, many people didn’t even realize that algorithms exerted largely hidden control over crucial aspects of their lives.

Now an advocate as well as a computer scientist, Buolamwini founded the Algorithmic Justice League (AJL), a non-profit intended to unmask and fight the harms caused by algorithms. Unchecked, she notes, algorithms threaten our civil rights. AJL calls for regulation, transparency and consent in equitable and accountable uses of artificial intelligence.

Given the newness and nuances of the field, some of those being harmed might not even realize it. The Rockefeller Foundation, as part of its commitment to helping build a more equitable society, is supporting AJL as it sets up the Community Reporting of Algorithmic System Harms (CRASH) project, a new initiative to support communities as they work to prevent, report and seek redress for algorithmic harms. As Buolamwini puts it: “If you have a face, you have a place in this conversation.”

“The CRASH project gives people who have been harmed by algorithmic systems a new way to report and seek redress for those harms. This is a critical tool for reducing suffering today and building a more equitable future,” says Evan Tachovsky, the Foundation’s Director and Lead Data Scientist for Innovation.

So far, CRASH has gathered a team of researchers, community organizers and educators to focus on questions like: “How might companies and organizations build algorithmic systems that are more equitable and accountable? How do people learn that they are being harmed by an Artificial Intelligence (AI) system, and how do they report that harm? How can algorithmic harms be redressed?”

A Start at the Bellagio Library

The project traces its roots to a convening at Rockefeller’s Bellagio Center in Italy, which gathers thought leaders in various fields. There, Buolamwini met Camille Francois, a researcher and technologist working on the intersection of technology and human rights. The two, along with designer and scholar Dr. Sasha Costanza-Chock, are co-leads on the project.

“We were together in the Bellagio library, talking about our respective work and experiences,” Francois recalls, “and the idea of building a platform to apply some of the information security models, like bug bounties, to the algorithmic harms space emerged naturally.” Bug bounties are programs where hackers can report bugs and vulnerabilities and receive recognition and compensation in return.

“Despite working on difficult topics,” Francois remembers, “Joy and I both had a commitment to a sense of optimism, to bringing a playful and participatory approach to these issues when possible. Bellagio played a critical role in this project.”

Costanza-Chock, who is transgender and nonbinary and uses they/them pronouns, describes their personal connection to algorithmic justice in their new book with MIT Press, Design Justice, which opens with a story of their passing through airport security. The user interface of the millimeter-wave scanners requires TSA officials to press a blue “boy” or pink “girl” button in order to conduct a scan, reinforcing a binary gender and often marking gender non-conforming people as “risky.”

“Like many trans people, I often get flagged going through those machines. And then they do a more invasive search, running their hands over my body parts,” Costanza-Chock says, noting that the system is also often triggered by Black people’s hair, Muslim women’s headwraps and disabled people’s bodies.

“People don’t have a clear sense about the ways in which the technology of algorithms is already touching every aspect of their lives. My hope for this project is we empower hackers and tinkerers, as well as everyday people, to expose algorithmic harms and create a new wave of accountability. That would be a big win.”

Joy Buolamwini, Founder, Algorithmic Justice League

Early Progress in Shining A Light on Algorithmic Harms

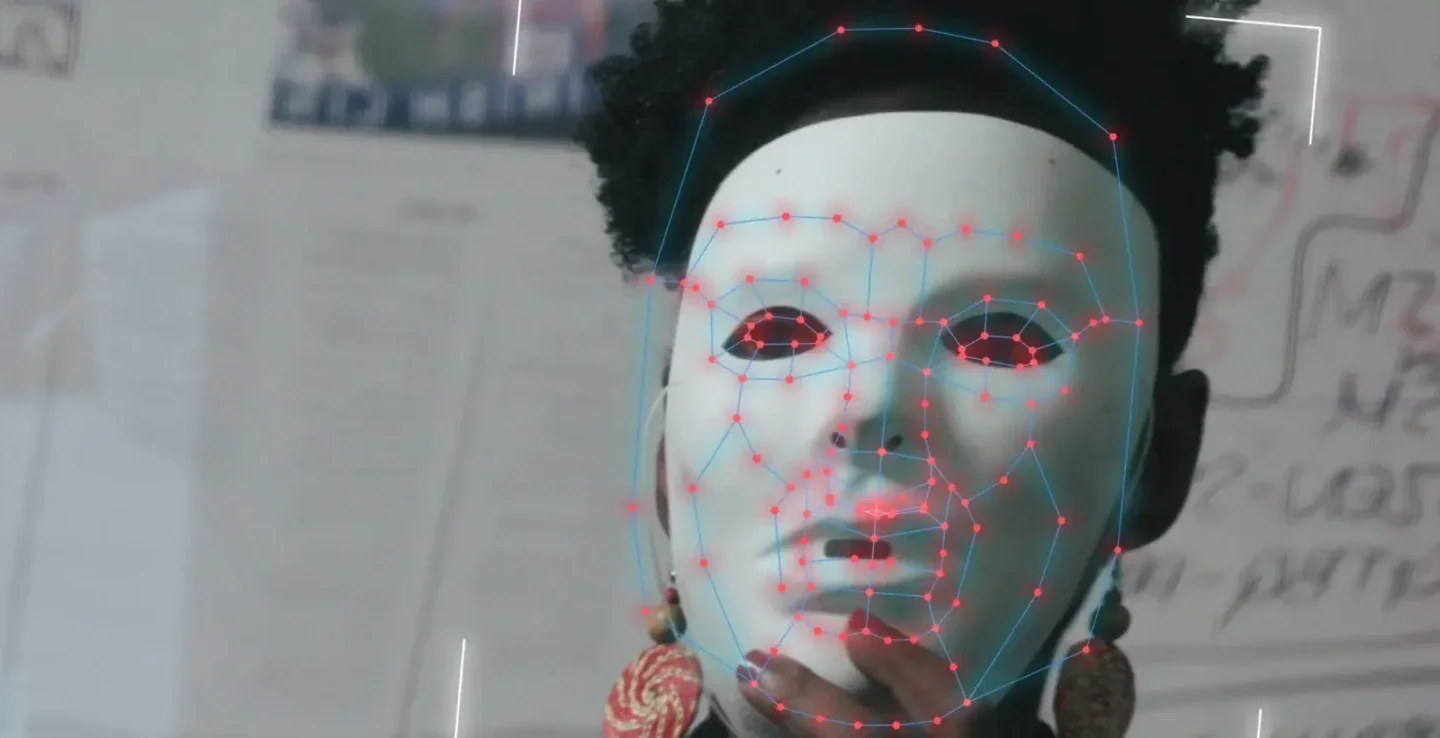

There have also been some early successes. In 2018 and 2019, Buolamwini completed two groundbreaking studies that revealed how systems from Amazon, IBM, Microsoft and others were unable to classify darker-skinned female faces as accurately as those of white men. By June 2020, all three companies had announced they would stop or pause their facial recognition products for law enforcement.

Buolamwini was featured in the 2020 documentary Coded Bias, which premiered at the Sundance Film Festival in January as part of the U.S. Documentary Competition and has helped raise public awareness of algorithmic bias.

San Francisco, Oakland, Boston and Portland, Oregon have all enacted at least partial bans on the use of facial recognition systems.

At the Boston Public Library, AJL created a very successful “Drag Versus AI” workshop, teaching participants about face surveillance and how to undermine the algorithms by dressing up in drag.

In Brooklyn, Buolamwini helped achieve a successful outcome for tenants of the Atlantic Plaza Towers, rent-stabilized housing units in the city’s Brownsville section, a neighborhood where 70 percent of the population is Black, about 25 percent is Hispanic, and the poverty rate in 2018 was 27.8 percent, compared with 17.3 percent citywide.

Tenants protested the landlord’s decision to install a facial recognition system to replace key fobs and provide entrance to their high-rise buildings. They noted that facial recognition technologies often harm people of color, and added that they did not want themselves or their guests to be tracked. They cited Buolamwini’s work, which found that some facial analysis software misclassifies Black women 35 percent of the time, compared with just 1 percent of the time for white males. Buolamwini, testifying before Congress in May 2019 about technical limitations of facial recognition technology, drew attention to the Brooklyn tenants.

Six months later, the landlord announced he was shelving the plan.

Buolamwini stresses that she remains a computer nerd and is not opposed to technology. What she wants is a transformation in how algorithmic systems are designed and deployed, and regulations to monitor and restrict the harms they can cause. “We are at a moment when the technology is being rapidly adopted and there are no safeguards,” she says. “It is, in essence, a wild, wild West.”
